Run:ai

AI Optimization and Orchestration

Run:ai is a Kubernetes-based software platform that provides advanced orchestration and management capabilities for GPU compute resources, specifically tailored for AI and deep learning workloads. Here are the key points about Run:ai:

It is a software solution built on top of Kubernetes, designed to optimize GPU utilization and scheduling for AI workloads.

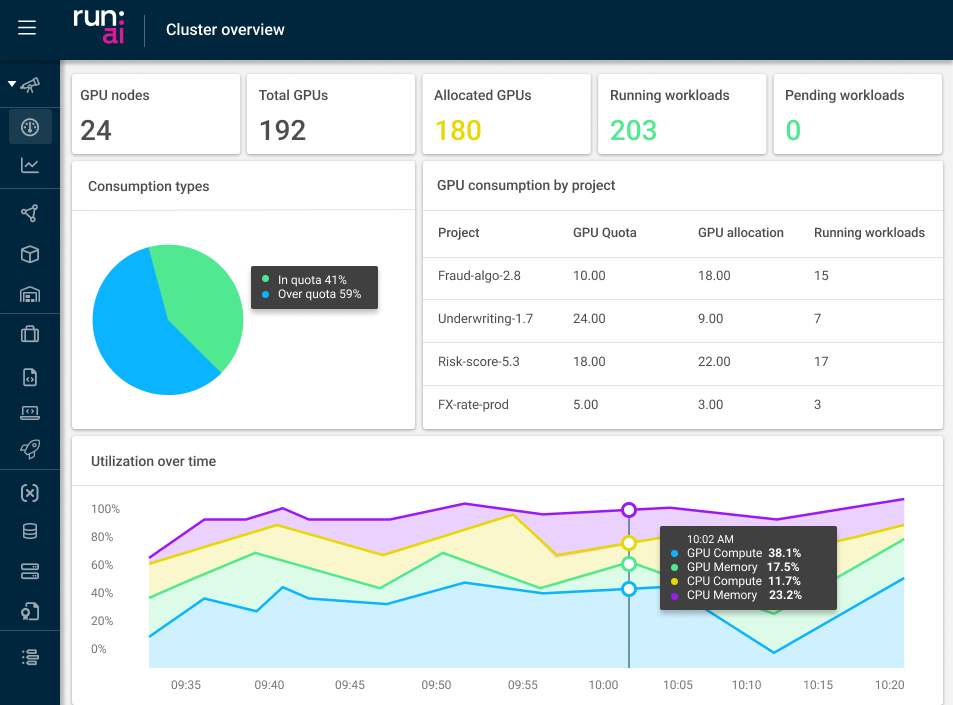

Run:ai provides GPU pooling, GPU fractions (sharing a single physical GPU among several workloads), and dynamic scheduling to raise GPU utilization and overall resource efficiency.
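To make pooling and fractional sharing concrete, here is a toy sketch of how fractional GPU requests might be packed onto a shared pool. It is a hypothetical illustration, not Run:ai's scheduler or API. The `Gpu` class, `place_jobs` function, and first-fit policy are assumptions chosen for clarity. Each GPU's capacity is normalized to 1, and `fractions.Fraction` is used so that halves and quarters add up exactly.

```python
from dataclasses import dataclass, field
from fractions import Fraction


@dataclass
class Gpu:
    """One physical GPU in a shared pool (hypothetical model); capacity normalized to 1."""
    name: str
    free: Fraction = Fraction(1)
    jobs: list = field(default_factory=list)


def place_jobs(gpus, requests):
    """First-fit placement of fractional GPU requests onto a shared pool.

    requests: list of (job_name, fraction) pairs, e.g. ("nb-a", Fraction(1, 2)).
    Returns {job_name: gpu_name}. A job that fits nowhere maps to None (pending).
    """
    placement = {}
    for job, frac in requests:
        if not (0 < frac <= 1):
            raise ValueError(f"{job}: fraction must be in (0, 1], got {frac}")
        # Pick the first GPU in the pool with enough unallocated capacity.
        target = next((g for g in gpus if g.free >= frac), None)
        if target is None:
            placement[job] = None  # stays pending until capacity frees up
            continue
        target.free -= frac
        target.jobs.append(job)
        placement[job] = target.name
    return placement


if __name__ == "__main__":
    pool = [Gpu("gpu-0"), Gpu("gpu-1")]
    requests = [
        ("nb-a", Fraction(1, 2)),
        ("nb-b", Fraction(1, 4)),
        ("train-c", Fraction(1)),
        ("nb-d", Fraction(1, 4)),
        ("nb-e", Fraction(1, 2)),
    ]
    print(place_jobs(pool, requests))
```

Here three small notebook jobs share `gpu-0`, the full-GPU training job lands on `gpu-1`, and `nb-e` stays pending because no GPU has half its capacity free. First-fit is used only because it is easy to follow. A production scheduler would also weigh fragmentation, priorities, and preemption.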

It provides a centralized interface to manage shared GPU infrastructure, allocate resources, set quotas and priorities, and monitor usage across on-premises, cloud, or hybrid environments.
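Quotas and priorities can be illustrated with a deliberately simplified allocation model. The sketch below is hypothetical and is not Run:ai's actual fairness policy. The `Project` fields, the whole-GPU unit, and the two-phase rule are assumptions. In the first phase, each project is guaranteed GPUs up to its quota. In the second, GPUs that under-using projects leave idle are lent to projects with extra demand, highest priority first.

```python
from dataclasses import dataclass


@dataclass
class Project:
    name: str
    quota: int     # GPUs guaranteed to the project (hypothetical unit: whole GPUs)
    demand: int    # GPUs currently requested by the project's workloads
    priority: int  # higher value is served first when spare GPUs are lent out


def allocate(total_gpus, projects):
    """Toy quota-then-priority allocation of whole GPUs across projects."""
    if sum(p.quota for p in projects) > total_gpus:
        raise ValueError("quotas exceed cluster capacity")
    # Phase 1: every project gets its demand, capped at its guaranteed quota.
    alloc = {p.name: min(p.demand, p.quota) for p in projects}
    spare = total_gpus - sum(alloc.values())
    # Phase 2: lend idle GPUs to over-quota demand in descending priority order.
    for p in sorted(projects, key=lambda proj: proj.priority, reverse=True):
        extra = min(p.demand - alloc[p.name], spare)
        alloc[p.name] += extra
        spare -= extra
    return alloc


if __name__ == "__main__":
    projects = [
        Project("research", quota=4, demand=6, priority=1),
        Project("prod", quota=3, demand=1, priority=2),
        Project("dev", quota=1, demand=3, priority=0),
    ]
    print(allocate(8, projects))
```

On an 8-GPU cluster, `prod` uses only 1 of its 3 guaranteed GPUs. The 2 GPUs left idle go to `research`, which outranks `dev`, so the result is 6, 1, and 1 GPUs respectively. A real platform would also reclaim lent GPUs when their owner's demand returns, which this sketch does not model.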

Run:ai integrates with NVIDIA's AI stack, including DGX systems, NGC containers, and NVIDIA AI Enterprise software, offering a full-stack solution optimized for AI workloads.

It supports all major Kubernetes distributions and can integrate with third-party AI tools and frameworks.

Run:ai is used by enterprises across various industries to manage large-scale GPU clusters for AI deployments, including generative AI and large language models.